Work done at NVIDIA Research, Stony Brook University, and Adobe Research.

Paper: PDF

Videos: Our research video, Two Minute Papers (thanks!)

Elsewhere: NVIDIA Research Page, Adobe Research Page, NYU Immersive Computing Lab, Li-Yi Wei, Morgan McGuire

Paper Pages: ACM Transactions on Graphics 2018, Semantic Scholar, ResearchGate

Press: Science Daily, Road to VR, Inverse, VRFocus, Innavate, EurekAlert, New Atlas, Digital Trends, The Foundry’s blog (thanks!)

Collaborators: Qi Sun, Anjul Patney, Li-Yi Wei, Jingwan Lu, Paul Asente, Suwen Zhu, Morgan McGuire, David Luebke, Arie Kaufman.

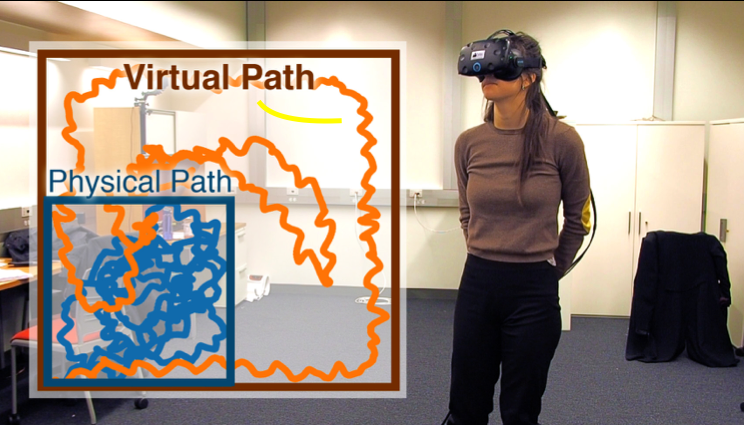

Redirected walking (tricking a VR user into walking along a safe path without changing their experience) is a challenging task. Some simple approaches, like mapping camera rotation to movement, cause severe and immediate simsickness. Subtler ones still carry a cost that is measurable as cognitive load: for example, the extra time it takes a user to answer a question while being redirected, compared to a baseline.
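
As a rough illustration of that cognitive-load measure, the sketch below compares mean answer times with and without redirection. The helper name `cognitive_load_cost` and the sample timings are hypothetical, not code or data from the study; a positive result means users answered more slowly while being redirected.

```python
from statistics import mean


def cognitive_load_cost(baseline_times_s, redirected_times_s):
    """Mean extra answer time (seconds) while redirected vs. the baseline.

    Hypothetical helper: positive values suggest redirection adds cognitive load.
    """
    if not baseline_times_s or not redirected_times_s:
        raise ValueError("need at least one response time per condition")
    return mean(redirected_times_s) - mean(baseline_times_s)


# Made-up response times, for illustration only.
baseline = [1.2, 1.4, 1.3]
redirected = [1.6, 1.8, 1.7]
print(f"redirected answers were {cognitive_load_cost(baseline, redirected):.2f} s slower on average")
```

A real study would also need a significance test across many trials and users; a single difference of means only shows the shape of the comparison.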

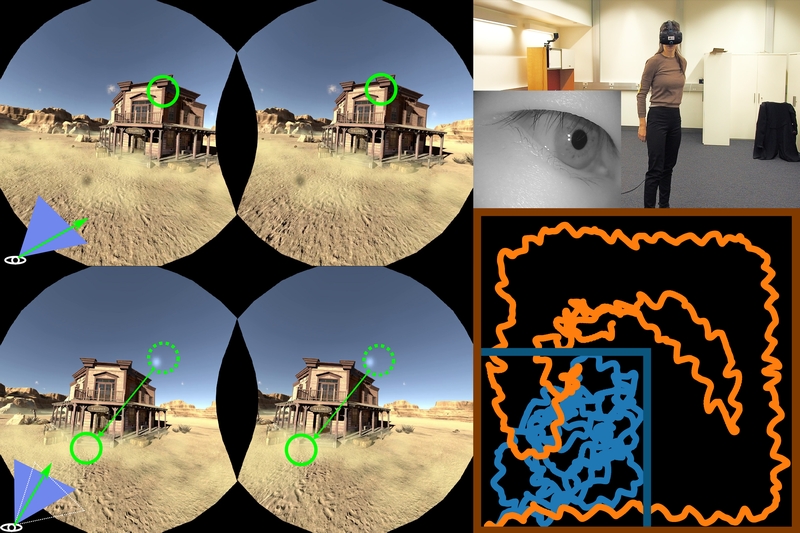

We tried to address this by testing whether a user will notice minor redirections applied while they are temporarily blind during major eye saccades. During a saccade, visual input is suppressed (a mechanism known as "saccadic suppression"), so detecting saccades with an eye tracker is enough to time redirections into these blind windows. What's more, since saccadic suppression lasts hundreds of milliseconds(!), eye-tracker latency is hardly an issue.
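
The page doesn't say how saccades are detected. A common approach, used here purely as an assumption, is a velocity threshold (often called I-VT): measure the angle between consecutive gaze directions and flag a saccade when the angular speed exceeds a cutoff. The 180°/s threshold and 250 Hz sample rate below are illustrative values, not parameters from the paper.

```python
import math


def angle_between_deg(a, b):
    """Angle in degrees between two 3D gaze direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("gaze directions must be non-zero vectors")
    # Clamp to [-1, 1] so floating-point drift can't push acos out of range.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def is_saccade(prev_dir, curr_dir, dt_s, threshold_deg_per_s=180.0):
    """I-VT check: True when gaze rotates faster than the (assumed) threshold."""
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    speed_deg_per_s = angle_between_deg(prev_dir, curr_dir) / dt_s
    return speed_deg_per_s > threshold_deg_per_s


# Hypothetical 250 Hz eye tracker: samples arrive every 4 ms.
dt = 1 / 250
ahead = (0.0, 0.0, 1.0)
# Gaze rotated 2 degrees and 0.1 degrees to the right of straight ahead.
jump = (math.sin(math.radians(2.0)), 0.0, math.cos(math.radians(2.0)))
drift = (math.sin(math.radians(0.1)), 0.0, math.cos(math.radians(0.1)))

print(is_saccade(ahead, jump, dt))   # 2 deg in 4 ms is about 500 deg/s
print(is_saccade(ahead, drift, dt))  # 0.1 deg in 4 ms is about 25 deg/s
```

A velocity threshold is cheap enough to run on every tracker sample, which suits the point above: the suppression window is long compared to a few milliseconds of detection delay.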

Our experiment is fairly straightforward: when a major saccade is detected, perform a redirection. Redirecting by one degree at a time turns out to work quite well.
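
A minimal sketch of that loop, assuming the redirection is a rotation of the virtual scene about the vertical axis and that some saccade detector supplies one boolean per frame. The class and method names are hypothetical; the only idea taken from the text is applying one small step (one degree here) per detected saccade.

```python
class SaccadicRedirector:
    """Hypothetical sketch: add a small scene yaw offset at each saccade onset."""

    def __init__(self, step_deg=1.0):
        self.step_deg = step_deg
        self.scene_yaw_deg = 0.0  # total rotation injected so far
        self._in_saccade = False  # previous frame's saccade state

    def update(self, saccade_now, direction=1):
        """Call once per frame. direction (+1 or -1) picks which way to turn."""
        if direction not in (-1, 1):
            raise ValueError("direction must be +1 or -1")
        # Act only on the rising edge, so a saccade spanning several
        # frames produces a single redirection step.
        if saccade_now and not self._in_saccade:
            self.scene_yaw_deg += direction * self.step_deg
        self._in_saccade = saccade_now
        return self.scene_yaw_deg


# Simulated per-frame detector output containing two separate saccades.
frames = [False, True, True, False, True, False]
redirector = SaccadicRedirector(step_deg=1.0)
for saccade_now in frames:
    yaw = redirector.update(saccade_now)
print(f"scene rotated by {yaw:.1f} degrees")
```

Triggering only on the rising edge keeps one long saccade that spans several frames from being counted as several redirections. At one degree per saccade, a 90° change of heading takes about 90 saccades.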

Most redirection techniques are at odds with our proprioception, our sense of our own body pose: when what we see doesn't match what we feel, we get simsickness. In our case, the user is nearly blind during redirection, so their visual system barely registers the redirection motion, and the experience is almost seamless.

Major credit to David Luebke for blurting out the idea in a meeting, then pushing Anjul and me to work on it.

BibTeX:

@article{Sun2018Saccade,
  author  = {Qi Sun and Anjul Patney and Li-Yi Wei and Omer Shapira and Jingwan Lu and Paul Asente and Suwen Zhu and Morgan McGuire and David Luebke and Arie Kaufman},
  title   = {Towards Virtual Reality Infinite Walking: Dynamic Saccadic Redirection},
  journal = {{ACM} Transactions on Graphics},
  note    = {SIGGRAPH 2018},
  month   = {August},
  day     = {15},
  year    = {2018},
  pages   = {16},
  url     = {https://omershapira.com/portfolio/saccadic-redirected-walking-2018/},
}